Part of the reason I wrote that we’re probably as worried as we should be about climate change is to learn from my readers’ best objections. To be honest, I am somewhat disappointed by the effort so far. dreeves argued in favor of a carbon tax, but both I and the median American are basically in agreement already. No one wrote anything that would challenge the “close to the median on the non-worry side” position besides shakeddown, but I haven’t seen too much evidence for his two assertions:

- That the economic cost of climate change action is small.

- That the chance of mass extinction due to climate change is nontrivial.

Left-leaning CNBC quoted the economic cost of fighting climate change in the US as 2-4% of GDP. That could well be worth every penny, but it’s not a small amount. It also suggests that we might start seeing diminishing returns on the next billion dollars of effort.

On the other hand, there’s a looming disaster with a decent chance of wiping out humanity that we’re currently dedicating 0.00001% of world GDP to addressing. That’s 3% of what Americans alone spend on Halloween costumes for their pets. If you want to direct your marginal dollars towards saving humankind from annihilation, rather than towards saving dogkind from seasonal unfashionableness, I would encourage you to join me in donating to artificial intelligence alignment research.

The last time we raised money for a cause I believed in, I wrote it up myself. But there are so many great resources explaining AI alignment that I don’t want to get in the way.

If you don’t have all day, you can read a blog post with stick figure drawings, or a blog post with childhood photos. If you have all day, you can read the book. If you have just 14 minutes and also want to see a person who seems physiologically incapable of laughter try to tell a couple of jokes, you can watch this TED talk. If you got here from SlateStarCodex and only want to read stuff by Scott, you can read any of the dozens of posts by Scott.

I do hope you take the time to follow the above links, so I’ll limit the discussion here to the only thing I know in life: opinion distribution curves.

An important characteristic of the climate change opinion distribution curve is that the smarter opinions are closer to the middle than to the extremes. It’s not that there aren’t stupid people holding opinions close to the median for stupid reasons; it’s that there isn’t a lot of intelligent discussion around the tails.

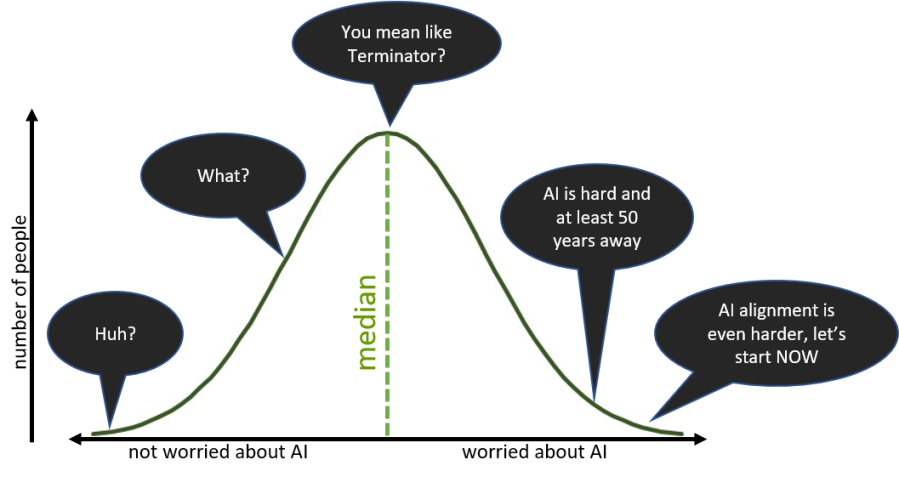

In contrast, the opinion distribution curve on AI looks like this:

As a species, the sensible direction for our collective curve to move is to be a hell of a lot more worried about AI.

Fortunately, this seems to be happening. There’s a solid trend of smart people like Elon Musk, Bill Gates, Stephen Hawking, and a long list of computer scientists from Alan Turing to Stuart Russell who have become a lot more worried about superintelligent AI immediately after giving the subject serious thought. It’s time to join them.

A smart thing to do if we’re worried about AI is to donate money to AI alignment research right now. We don’t know how far away superintelligent AI is, and we will probably think that it’s decades away until the last year or two; by then it will be quite late. Also, scientific research is something that builds on itself over time. A problem that takes 50 years for scientists to solve can’t usually be solved in 5 years with a tenfold budget.

There’s a good reason for Americans, in particular, to donate money before the end of 2017. The new tax plan raises the standard deduction to $12k/$24k and eliminates most itemized deductions. This means that you won’t get to deduct the first several thousand dollars of charity donations going forward, but you can in 2017. Whatever amount you were going to donate in 2018, it makes sense to donate it now and get a tax cut.

A non-suicidal civilization should be dealing with existential risk collectively, for example with a government-funded “AI safety Manhattan project” of our best scientists. We’re not there yet, and in the meantime, private donations by smart people need to carry the load. To get there it’s also important to spread the word, which is why I’m blogging about matching funds publicly instead of giving anonymously.

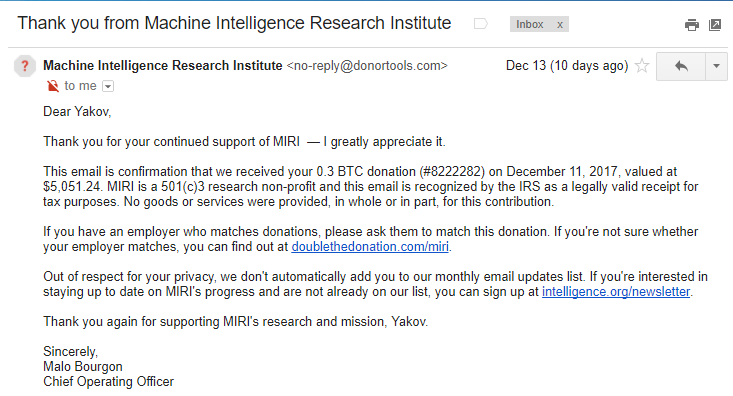

I am planning to donate to the Machine Intelligence Research Institute fundraiser because I’m reasonably certain that MIRI is doing important work on AI alignment. However, I’m not as certain that they’re the absolute best in the field. I encourage my readers to look at other organizations like the Future of Life Institute, the Center for Human-Compatible AI, the Berkeley Existential Risk Initiative, the Future of Humanity Institute at Oxford, and the Effective Altruism Far Future Fund. In my donation to MIRI, I will match the amount donated by my readers to any legitimate organization that deals with existential risk, up to 0.3 Bitcoins (currently $5,150).

I will go ahead with my donation on December 24th, so in the next two weeks please consider giving some money to ensure that humanity survives beyond our generation. Email me your donation receipt when you do, and I will happily give you credit on this blog. I will include or exclude according to your wishes any of the following information: your name, the amount donated, the organization you chose, and the reason for your donation.

Good Solstice, Merry Christmas, Hag Hannukah Sameah, Joyous Kwanzaa, and Happy Far Future to us all!

Donation update – my readers have donated over $35,000 and I matched over $5,000 of my own to MIRI!

Below are the names, amounts, and explanations from all the donors who wanted those details made public. Some of us also made MIRI’s list of top contributors.

James Landis – 1 BTC. “Now is a really good time to donate Bitcoin to charity – it harnesses the greed of speculation and counterbalances all the unethical uses of the currency so far (cryptolocker being among the worst). It’s a great way to capture all of the capital gains without any of the tax penalty, too!”

Clark Gaebel – $12,000. “For a long time now (well before hearing about the AI-risk folk), I’ve considered unfriendly AI to be inevitable without intervention. As a fun exercise, spend 30 quiet seconds thinking of a way to design a super-intelligence which makes as many paperclips as possible, and isn’t dangerous. It’s hard!

Designing a general optimizer is hard. Once upon a time, I thought deep learning wasn’t good enough to get there. Complicated, deep, neural networks pattern-matched in my head to “overfitting”. But when AlphaZero pwned Stockfish, I updated. It didn’t just beat humans at chess. It beat the best humans with the assistance of a computer at chess.”

Kevin Fischer – $5000. “I’ve never donated to MIRI or any other non-profit in the AI alignment field before, but your article convinced me to do so this year.”

I have also received donation receipts from Triinu, Cliff, Matthijs, Keegan, and Eric. If any of the above want your details published, let me know.

If I forgot someone who donated let me know ASAP, and if you were partly inspired by my blog to donate you can email me your donation receipt and I’ll add you to the list as well.

Thank you all!

Thanks for replying. Counterpoints:

a) computational (inside view) – you estimate the cost of spending on climate change as 2-4% of GDP, but you should be looking at marginal costs minus marginal benefits.

To take a simplified example, assume the only thing we can do for pollution is raise the carbon tax, and we lose 1% of GDP for each dollar we raise it. We’re getting reduced existential risk, but we’re also getting lots of other benefits, like better public health and less traffic congestion. The total lost utility should be the (GDP loss)-(benefits), even without accounting for the X-risk.

This exchange rate gets worse the higher the carbon tax is. Assuming no mass extinction risk, the break-even point is where the tax matches the social cost of carbon (about $31 per ton according to http://www.pnas.org/content/114/7/1518.full). The current best exchange rate is estimated at $0.81 per ton for third world charities (https://www.givingwhatwecan.org/research/other-causes/climate-change/) and about three times that in America. So right now, we’d actually have to raise the carbon tax by $30 just to reach the point where we’re losing any money on it at all, let alone until it’s computationally reasonable to just ignore the side benefits.

b) outside view: You point out that the bell curve for opinions on AI is skewed in terms of expertise. This also applies to climate change – climate change has a hippy movement that puts a lot of non-experts on the fringe, so the left end has a lot of non-experts, but if you ignore them there’s still a very clear expertise imbalance. The same argument for AI works for climate change.


I explicitly wrote about the cost net of benefits because I didn’t have a good estimate of the benefits. Thanks for providing some info on that front.

With that settled, we seem to be in complete agreement: we both support a carbon tax up to the total negative externality (basic Econ 101), and we both agree that individual action / charity donation to fight climate change is pretty ineffectual. So let’s vote for a carbon tax and donate to MIRI :)


I wouldn’t say that individual action on climate change is ineffectual. If people are able through individual conservation efforts to reduce their energy use by say 10% (which is not that hard for the average American), not only does that reduce carbon emissions right away, it also significantly lowers the cost of replacing our power generation with solar/wind/nuclear and probably speeds up the process. It’s not a solution but it’s probably worth the effort.


The whiplash here is amazing. Do you acknowledge that somebody who’s at one of the ends of the climate change opinion curve (or at the opposite end of your position on the AI risk curve) would make a reasonableness function similar to the one you showed here, or that someone in the middle of the AI risk opinion curve would make a reasonableness function similar to the one you made for global warming? For example, left to right on the AI opinion curve: “The only people worrying about AI safety are overimaginative nerds who like the Terminator much more than anyone should and who need to signal about how obviously smarter they are than anyone else,” “People who design AI will mostly want human friendly AI and human hostile AI will be discouraged so natural selection will take care of this,” “AI risk is a concern and work should be done on this but AI is saving people’s lives and can do so much more even in the near future so we shouldn’t let concerns over AI risk hinder the development of AI,” “AI will kill us all (haven’t you watched the Terminator) and we need to stop it now and never try to open this Pandora’s box.” See, lots of dumb opinions on the edges of the curve and lots of smart opinions in the center. The center is where everyone should be.

It’s important to realize two things: 1) there isn’t a cause so noble that it doesn’t attract idiots and 2) any idea that has more than one person supporting it has at least one intelligent idea in support of it. There are smart and dumb opinions all along the length of any opinion curve. Cherry picking isn’t convincing to someone who realizes that you’re doing so.

While I don’t think I “obsess” about light bulb efficiency, I do take that into consideration when purchasing new bulbs. I think that there should be a carbon tax and I think there should be government grants for AI risk research. I think that there are reasonable actions that I can take to reduce my carbon footprint while the less than $500 I can afford to donate each year to charity will have little overall effect on AI safety (I also see many more areas where the marginal effect of my charity dollar is greater than AI safety). I seem to disagree with you in that I think that, for me, individual action on climate change has more impact than individual action on AI risk research.

One last thing I would caution you on: remember the lesson on inflated bubbles. You are, admittedly, on the edge of opinion on AI safety. Now, just being on the edge of an opinion curve doesn’t mean that your opinion is wrong, but it does mean that, if you’re not careful, you could alienate your natural allies and push them even further away.

Now you’ve tried to limit the discussion about this to the opinion curve and not to a discussion specifically about the merits of AI safety (or climate change) and I acknowledge that and I’ve made some effort to follow that example. I haven’t given my actual opinion on either here.


Where are they, the thousands of crazy geeks who think we should lock up all computer scientists just in case? Can you show me one position on the “worried” end of the AI spectrum that is held by a substantial number of people and yet easily refuted? When that actually happens, I’ll start worrying that the pendulum has swung too far. And humanity will be much safer.

We are not like kites blown about purely by the wind of consensus, we are thinking people who can learn about an issue ourselves and establish a baseline of sanity before looking to see where popular opinion lies. I learned about climate change a decade ago, I updated to think that a carbon tax and solar panels are good but “local food” and lightbulbs aren’t important, and now the median person has caught up. Then I learned about AI alignment, and as of 2017 the median person is not even aware that it’s a topic.

Other thinking people are following the same path, which is why educated opinions about climate change don’t seem to be changing much in recent years, while opinions about AI are rapidly accumulating on the worried side of the curve.

Now based on conservative numbers ($10M AI budget, 2% GDP for climate change), your $500 can be 1/20,000th of the annual AI safety research budget, or 1/1,000,000,000th of the economic effort put towards combating climate change. Are you really 50,000 times more effective directing your resources towards the latter?
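For anyone who wants to check the arithmetic above, here is a minimal sketch in Python. The only inputs are the figures quoted in the paragraph ($500, a $10M AI safety budget, the 1/1,000,000,000 climate share, and 2% of GDP); the variable names are my own, and the implied spending and GDP totals are back-calculated from those rounded figures rather than taken from any source.

```python
from fractions import Fraction

# Figures quoted in the comment above (all rounded, "conservative" numbers).
donation = 500                               # dollars per year
ai_budget = 10_000_000                       # assumed annual AI safety budget
climate_share = Fraction(1, 1_000_000_000)   # quoted share of climate spending

# Share of the AI safety budget that a $500 donation represents.
ai_share = Fraction(donation, ai_budget)

# How many times larger the AI share is than the climate share.
ratio = ai_share / climate_share

# Back-calculated, not sourced: the climate spending and GDP that the
# quoted 1/1,000,000,000 share would imply at 2% of GDP.
implied_climate_spend = donation / climate_share
implied_gdp = implied_climate_spend / Fraction(2, 100)

print(f"AI share: 1/{ai_share.denominator:,}")
print(f"Ratio of shares: {int(ratio):,}x")
print(f"Implied climate spending: ${int(implied_climate_spend):,}")
print(f"Implied GDP at 2%: ${int(implied_gdp):,}")
```

The ratio of the two shares comes out to the 50,000 used in the question above. Exact fractions are used instead of floats so the round numbers stay round.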


Do you really think there aren’t stupid opinions on the worried end of the spectrum? There are stupid opinions for every perspective whether or not the perspective is correct. I do seriously question the rigorous method that was used to produce the opinion curves on global warming and AI (the same method was applied to both, right?). The “ban all AI” position should be taken at least as seriously as the “die, humans” position.

I’m not arguing that a position on the end of the spectrum is wrong. I think the correctness of a position isn’t determined by its position on the opinion spectrum. You’ve said you want to talk about opinion curves so I’m talking about opinion curves. If you want to talk about the validity of your or other opinions then that can happen.

Also, there are plenty of people who know more about AI than either you or I do and who aren’t as alarmist as you seem to be. Cherry picking aside, what evidence is there that opinions about AI safety are rapidly converging on your own?

Also, let’s take my statement that my charity money has higher marginal utility elsewhere and then refute it by comparing it to one specific elsewhere. If anything I should expect you to demonstrate that there exists absolutely no other way I could spend this $500 with a higher marginal utility but I won’t do that because that wouldn’t be fair.

I do think that the marginal utility of my money would be better spent providing the global poor with cash or clean drinking water (both are things, if I remember correctly, you specifically advocated for) or, say, malaria nets or computer equipment.

One last thing: just as changing the light bulbs in my home from less efficient to more efficient won’t make an appreciable impact on global warming, $500 won’t have an appreciable impact on AI safety. A large shift to efficient light bulbs would have an impact (something a carbon tax might do), just as lots of small donations in aggregate can have an impact on AI safety. Some consistency would be appreciated.
