Basketballism

Imagine that tomorrow everyone on the planet forgets the concept of training basketball skills.

The next day everyone is as good at basketball as they were the previous day, but this talent is assumed to be fixed. No one expects their performance to change over time. No one teaches basketball, although many people continue to play the game for fun.

Geneticists explain that some people are born with better hand-eye coordination and are thus able to shoot a basketball accurately. Economists explain that highly-paid NBA players have a stronger incentive to hit shots, which explains their improved performance. Psychologists note that people who take more jump shots each day hit a higher percentage and theorize a principal factor of basketball affinity that influences both desire and skill at basketball. Critical theorists claim that white men’s under-representation in the NBA is due to systemic oppression.

Papers are published, tenure is awarded.

New scientific disciplines emerge and begin studying jump shots more systematically. Evolutionary physiologists point out that our ancestors threw stones more often than they tossed basketballs, which explains our lack of adaptation to that particular motion. Behavioral kinesiologists describe systematic biases in human basketball, such as the tendency to shoot balls with a flatter trajectory and a lower release point than is optimal.

When asked by aspiring basketball players if jump shots can be improved, they all shake their heads sadly and say that it is human nature to miss shots. A Nobel laureate behavioral kinesiologist tells audiences that even after he wrote a book on biases in basketball his shot did not improve much. Someone publishes a study showing that basketball performance improves after a one-hour training session with schoolchildren, but Shott Ballexander writes a critical takedown pointing out that the effect wore off after a month and could simply be random noise. The field switches to studying “nudges”: ways to design systems so that players hit more shots at the same level of skill. They recommend that the NBA adopt larger hoops.

Papers are published, tenure is awarded.

Then, one day, a woman who is not an academic, just someone looking to get good at basketball, sits down to read those papers. She realizes that the lessons of behavioral kinesiology can be used to improve her jump shot, and practices releasing the ball at the top of her jump from above the forehead. Her economist friend reminds her to give the ball more arc. As the balls start swooshing in, more people gather at the gym to practice shooting. They call themselves Basketballists.

Most people who walk past the gym sneer at the Basketballists. “You call yourselves Basketballists and yet none of you shoots 100%”, they taunt. “You should go to grad school if you want to learn about jump shots.” Some of the Basketballists themselves begin to doubt the project, especially since switching to the new shooting techniques lowers their performance at first. “Did you hear what the Center for Applied Basketball is charging for a training camp?” they mutter. “I bet it doesn’t even work.”

Within a few years, some dedicated Basketballists start talking about how much their shot percentage improved and the pick-up tournaments they won. Most people say it’s just selection bias, or dismiss them by asking why they can’t outplay Kawhi Leonard for all their training.

The Basketballists insist that the training does help, that they really get better by the day. But how could they know?

As Wrong As Ever

A core axiom of Rationality is that it is a skill that can be improved with time and practice. The very names Overcoming Bias and Less Wrong reflect this: rationality is a vector, not a fixed point.

A core foundation of Rationality is the research on heuristics and biases led by Daniel Kahneman. The very first book in The Sequences is in large part a summary of Kahneman’s work.

Awkwardly for Rationalists, Daniel Kahneman is hugely skeptical of any possible improvement in rationality, especially for whole groups of people. In an astonishing interview with Sam Harris, Kahneman describes bias after bias in human thinking, emotions, and decision making. For every one, Sam asks: how do we get better at this? And for every one, Daniel replies: we don’t, we’ve been telling people about this for decades and nothing has changed, that’s just how people are.

Daniel Kahneman is familiar with CFAR, but as far as I know he has not put as much effort himself into developing a community and curriculum dedicated to improving human rationality. He has discovered and described human irrationality, mostly to an audience of psychology undergrads. And psychology undergrads do worse than pigeons at learning a simple probabilistic game so we shouldn’t expect them to learn rationality just by reading about biases. Perhaps if they started reading Slate Star Codex…

Alas, Scott Alexander himself is quite skeptical of Rationalist self-improvement. He certainly believes that Rationalist thinking can help you make good predictions and occasionally distinguish truth from bullshit, but he’s unconvinced that the underlying ability can be improved upon. Scott is even more skeptical of Rationality’s use for life-optimization.

I told Scott that I credit Rationality with a lot of the massive improvements in my financial, social, romantic, and mental life that happened to coincide with my discovery of LessWrong. Scott argued that I would do equally well in the absence of Rationality by finding other self-improvement philosophies to pour my intelligence and motivation into, and that those two traits are the root cause of my life getting better. Scott also seems to have been doing very well since he discovered LessWrong, but he credits Rationality with not much more than being a flag that united the community he’s part of.

So: on one side are Yudkowsky, CFAR, and several Rationalists, sharing the belief that Rationality is a learnable skill that can improve the lives of most seekers who step on the path. On the other side are Kahneman, Scott, several other Rationalists, and all anti-Rationalists, who disagree.

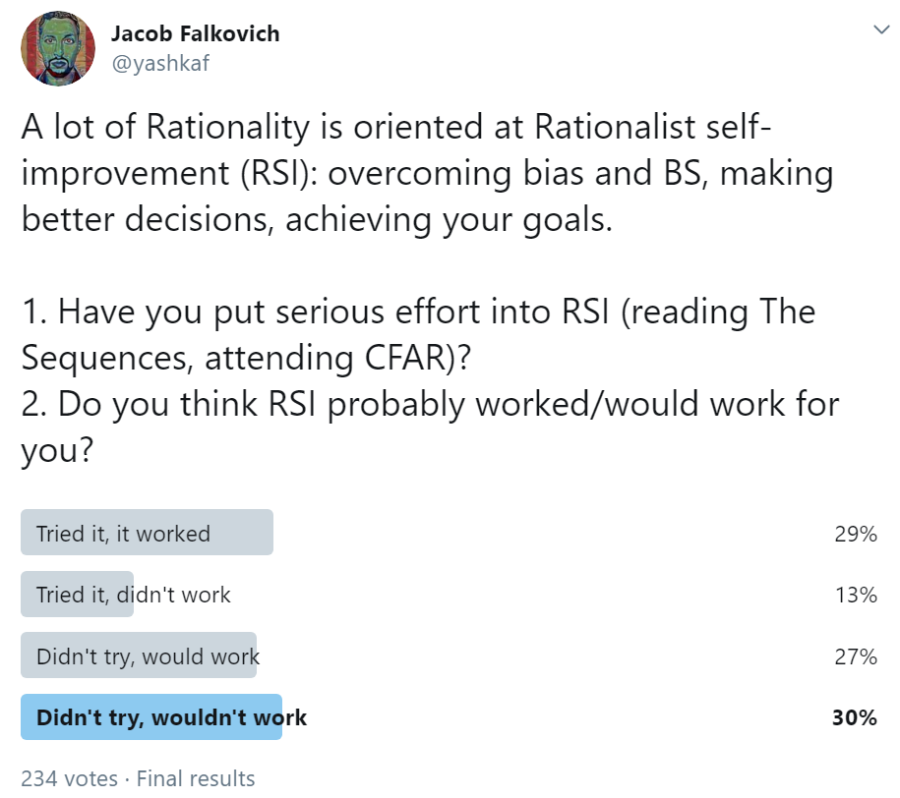

When I surveyed my Twitter followers, the results distributed somewhat predictably:

The optimistic take is that Rationalist self-improvement (RSI) works for most people if only they try it. The neutral take is that people are good at trying self-improvement philosophies that would work for them. The pessimistic take is that Rationalists are deluded by sunk cost and confirmation bias.

Who’s right? Is Rationality trainable like jump shots or fixed like height? Before reaching any conclusions, let’s try to figure out why so many smart people who are equally familiar with Rationality disagree so strongly about this important question.

Great Expectations

An important crux of disagreement between me and Scott is in the question of what counts as successful Rationalist self-improvement. We can both look at the same facts and come to very different conclusions regarding the utility of Rationality.

Here’s how Scott parses the fact that 15% of SSC readers who were referred by LessWrong have made over $1,000 by investing in cryptocurrency and 3% made over $100,000:

The first mention of Bitcoin on Less Wrong, a post called Making Money With Bitcoin, was in early 2011 – when it was worth 91 cents. Gwern predicted that it could someday be worth “upwards of $10,000 a bitcoin”. […]

This was the easiest test case of our “make good choices” ability that we could possibly have gotten, the one where a multiply-your-money-by-a-thousand-times opportunity basically fell out of the sky and hit our community on its collective head. So how did we do?

I would say we did mediocre. […]

Overall, if this was a test for us, I give the community a C and me personally an F. God arranged for the perfect opportunity to fall into our lap. We vaguely converged onto the right answer in an epistemic sense. And 3 – 15% of us, not including me, actually took advantage of it and got somewhat rich.

Here’s how I would describe it:

Of the 1289 people who were referred to SSC from LessWrong, two thirds are younger than 30, a third are students/interns or otherwise yet to start their careers, and many are for other reasons too broke for it to be actually rational to risk even $100 on something that you saw recommended on a blog. Of the remainder, the majority were not around in the early days when cryptocurrencies were discussed — the median “time in community” on LessWrong surveys is around two years. In any case, “invest in crypto” was never a major theme or universally endorsed in the Rationalist community.

Of those that were around and had the money to invest early enough, a lot lost it all when Mt. Gox was hacked or when Bitcoin crashed in late 2013 and didn’t recover until 2017 or through several other contingencies.

If I had to guess the percent of Rationalists who were even in a position to learn about crypto on LessWrong and make more than $1,000 by following Rationalist advice, I’d say it’s certainly less than 50%. Maybe not much larger than 15%.

Only 8% of Americans own cryptocurrency today. At the absolute highest end estimate, 1% of Americans, and 0.1% of people worldwide, made >$1,000 from crypto. So Rationalists did at least an order of magnitude better than the general population, almost as well as they could’ve done in a perfect world, and also funded MIRI and CFAR with Bitcoin for years ahead. I give the community an A and myself an A.
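The “order of magnitude” claim is a ratio of two rates. A minimal sketch, plugging in the 15% survey figure and the upper-end population estimates from the paragraph above (these are the post’s estimates, not measurements; the function name is illustrative). Because the baselines are upper bounds, the true ratios would be at least this large.

```python
import math

def orders_of_magnitude(group_rate, baseline_rate):
    """How many powers of ten separate two rates (log10 of their ratio)."""
    return math.log10(group_rate / baseline_rate)

rationalist_rate = 0.15  # LessWrong-referred SSC readers who made > $1,000 on crypto
us_upper = 0.01          # highest-end estimate for Americans (from the post)
world_upper = 0.001      # highest-end estimate worldwide (from the post)

for label, baseline in [("US", us_upper), ("world", world_upper)]:
    ratio = rationalist_rate / baseline
    oom = orders_of_magnitude(rationalist_rate, baseline)
    print(f"vs {label}: {ratio:.0f}x, {oom:.1f} orders of magnitude")
```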

In an essay called Extreme Rationality: It’s Not That Great, Scott writes:

Eliezer writes:

The novice goes astray and says, “The Art failed me.”

The master goes astray and says, “I failed my Art.”

Yet one way to fail your Art is to expect more of it than it can deliver.

Scott means to say that Eliezer expects too much of the art in demanding that great Rationalist teachers be great at other things as well. But I think that expecting 50% of LessWrongers who fill out a survey to have made thousands of dollars from crypto is setting the bar far higher than Eliezer’s criterion of “Being a math professor at a small university who has published a few original proofs, or a successful day trader who retired after five years to become an organic farmer, or a serial entrepreneur who lived through three failed startups before going back to a more ordinary job as a senior programmer.”

Akrasia

Scott blames the failure of Rationality to help primarily on akrasia.

One factor we have to once again come back to is akrasia. I find akrasia in myself and others to be the most important limiting factor to our success. Think of that phrase “limiting factor” formally, the way you’d think of the limiting reagent in chemistry. When there’s a limiting reagent, it doesn’t matter how much more of the other reagents you add, the reaction’s not going to make any more product. Rational decisions are practically useless without the willpower to carry them out. If our limiting reagent is willpower and not rationality, throwing truckloads of rationality into our brains isn’t going to increase success very much.
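Scott’s “limiting reagent” framing can be written as a minimum: output is capped by whichever input is scarcer. A toy sketch, assuming success is simply `min(rationality, willpower)` in made-up units (an illustrative formalization, not something Scott specifies):

```python
def success(rationality, willpower):
    """Limiting-reagent toy model (hypothetical units): the scarcer input caps the output."""
    return min(rationality, willpower)

willpower = 3.0
for rationality in (1.0, 3.0, 10.0, 100.0):
    # Past rationality == willpower, extra rationality adds nothing.
    print(rationality, success(rationality, willpower))
```

Under this model, “truckloads of rationality” only help until rationality stops being the scarce input.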

I take this paragraph to imply a model that looks like this:

[Alex reads LessWrong] -> [Alex tries to become less wrong] -> [akrasia] -> [Alex doesn’t improve].

I would make a small change to this model:

[Alex reads LessWrong] -> [akrasia] -> [Alex doesn’t try to become less wrong] -> [Alex doesn’t improve].

A lot of LessWrong is very fun to read, as is all of SlateStarCodex. A large number of people on these sites, as on Putanumonit, are just looking to procrastinate during the workday, not to change how their mind works. Only 7% of the people who were engaged enough to fill out the last LessWrong survey have attended a CFAR workshop. Only 20% ever wrote a post, which is some measure of active rather than passive engagement with the material.

In contrast, one person wrote a sequence on trying out applied rationality for 30 days straight: Xiaoyu “The Hammer” He. And he was quite satisfied with the result.

I’m not sure that Scott and I disagree much, but I didn’t get the sense that his essay was saying “just reading about this stuff doesn’t help, you have to actually try”. It also doesn’t explain why he was so skeptical about me crediting my own improvement to Rationality.

Akrasia is discussed a lot on LessWrong, and applied rationality has several tools that help with it. What works for me and my smart friends is not to try and generate willpower but to use lucid moments to design plans that take a lack of willpower into account. Other approaches work for other people. But of course, if someone lacks the willpower to even try and take Rationality improvement seriously, a mere blog post will not help them.

3% LessWrong

Scott also highlights the key sentence in his essay:

I think it may help me succeed in life a little, but I think the correlation between x-rationality and success is probably closer to 0.1 than to 1.

What he doesn’t ask himself is: how big is a correlation of 0.1?

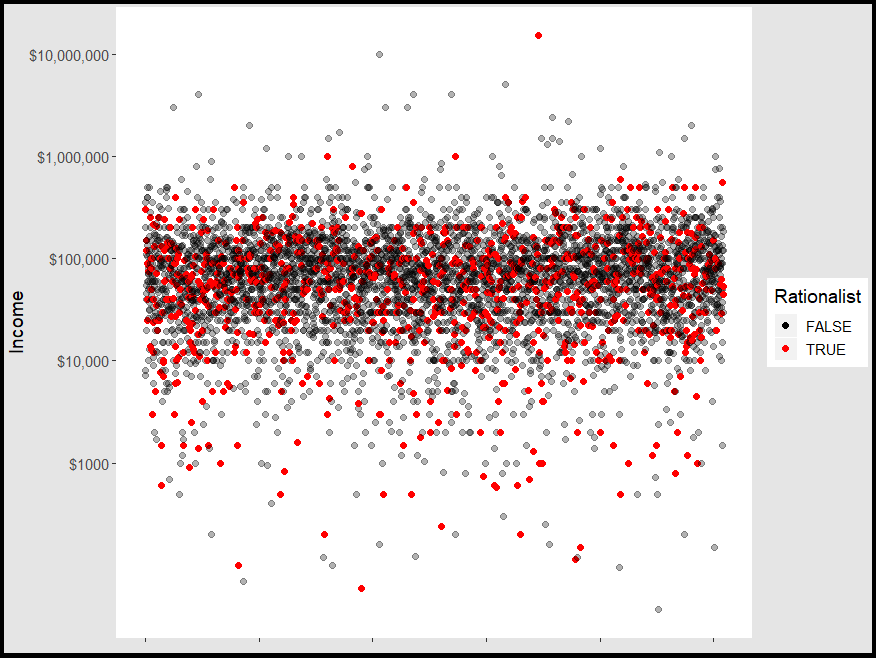

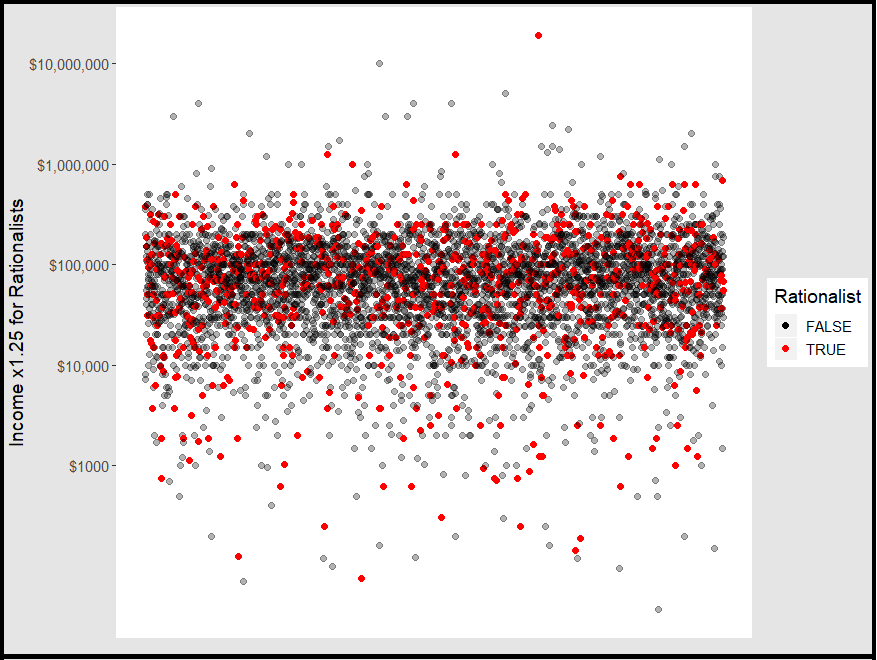

Here’s the chart of respondents to the SlateStarCodex survey, by self-reported yearly income and whether they were referred from LessWrong (Scott’s criterion for Rationalists).

And here’s the same chart with a small change. Can you notice it?

For the second chart, I increased the income of all rationalists by 25%.

The following things are both true:

- When you eyeball the group as a whole, the charts look identical. A 25% improvement for a quarter of the people in a group you observe is barely noticeable. The rich stayed rich, the poor stayed poor.

- If your own income increased 25% you would certainly notice it. And if the increase came as a result of reading a few blog posts and coming to a few meetups, you would tell everyone you know about this astounding life hack.

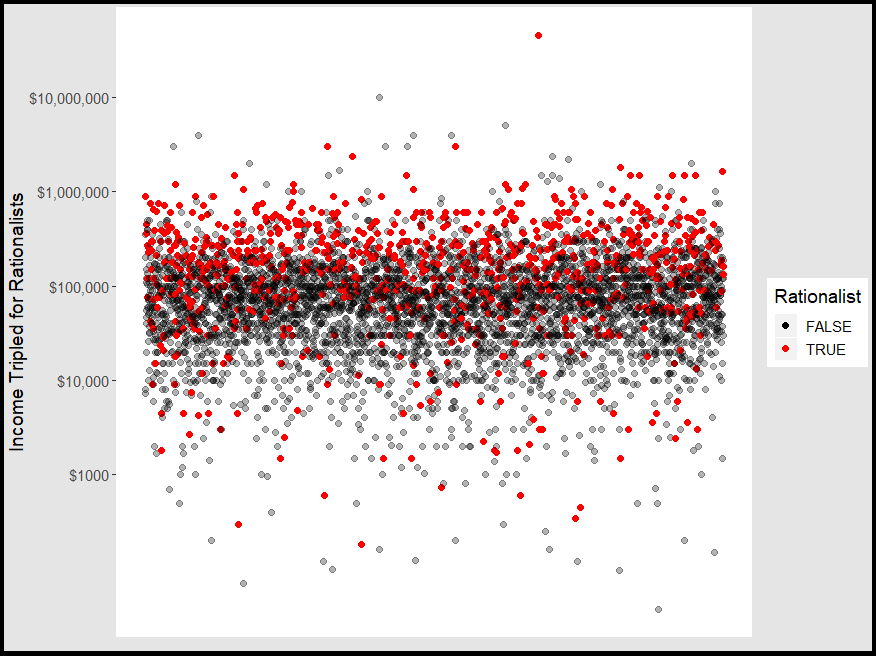

The correlation between Rationality and income in Scott’s survey is -0.01. That number goes up to a mere 0.02 after the increase. A correlation of 0.1 is absolutely huge: it would require tripling the income of all Rationalists.
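To see why a 25% boost barely moves a correlation when incomes are heavy-tailed, here is a synthetic sketch, not the survey data. It assumes (hypothetically) log-normal incomes with a $50,000 median and sigma = 1.5, built deterministically from quantiles, and flags every fourth person as a “Rationalist” so they make up a quarter of the group. The exact numbers depend entirely on these assumptions.

```python
import math
from statistics import NormalDist

def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)

def synthetic_incomes(n=10_000, median=50_000, sigma=1.5):
    """Deterministic log-normal incomes (hypothetical parameters), sorted ascending."""
    nd = NormalDist()
    return [median * math.exp(sigma * nd.inv_cdf((i + 0.5) / n)) for i in range(n)]

incomes = synthetic_incomes()
# Hypothetical flag: every 4th person is a "Rationalist" (a quarter of the group).
is_rat = [1.0 if i % 4 == 0 else 0.0 for i in range(len(incomes))]

def boosted(multiplier):
    """Multiply only the flagged people's incomes."""
    return [x * multiplier if r else x for x, r in zip(incomes, is_rat)]

r_before = pearson(is_rat, incomes)
r_25 = pearson(is_rat, boosted(1.25))
r_triple = pearson(is_rat, boosted(3.0))
print(f"before: {r_before:.3f}  +25%: {r_25:.3f}  x3: {r_triple:.3f}")
```

Under these assumptions the 25% boost leaves the correlation well under 0.1, while tripling pushes it much higher; a fatter-tailed distribution would shrink both numbers further.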

The point isn’t to nitpick Scott’s choice of “correlation = 0.1” as a metaphor. But every measure of success we care about, like impact on the world or popularity or enlightenment, is probably distributed like income is on the survey. And so if Rationality made you 25% more successful it wouldn’t be as obviously visible as Scott thinks it would be — especially since everyone pursues a different vision of success. In this 25% world, the most and least successful people would still be such for reasons other than Rationality. And in this world, Rationality would be one of the most effective self-improvement approaches ever devised. 25% is a lot!

Of course, the 25% increase wouldn’t happen immediately. Most people who take Rationality seriously have been in the community for several years. You get to 25% improvement by getting 3% better each year for 8 years.
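The compounding arithmetic can be checked directly. A minimal sketch; the function names are illustrative, and the 37-year horizon is an assumed span from starting college at about 18 to age 55:

```python
import math

def compounded(rate, years):
    """Total multiplier after compounding `rate` growth for `years` years."""
    return (1 + rate) ** years

def years_to_reach(target_multiplier, rate):
    """Smallest whole number of years for compounding to reach the target."""
    return math.ceil(math.log(target_multiplier) / math.log(1 + rate))

print(f"3% for 8 years:  x{compounded(0.03, 8):.2f}")   # about a 27% gain
print(f"3% for 37 years: x{compounded(0.03, 37):.2f}")  # roughly tripled
print(years_to_reach(1.25, 0.03))  # -> 8
```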

Here’s what 3% improvement feels like:

You know what feels crappy? 3% improvement. You busted your ass for a year, trying to get better at dating, at being less of an introvert, at self-soothing your anxiety – and you only managed to get 3% better at it.

If you worked a job where you put in that much time at the office and they gave you a measly 3% raise, you would spit in your boss’s face and walk the fuck out.

And, in fact, that’s what most people do: quit. […]

The model for most self-improvement is usually this:

- You don’t have much of a problem

- You found The Breakthrough that erased all the issues you had

- When you’re done, you’ll be the opposite of what you were. Used to be bad at dating? Now you’ll have your own personal harem. Used to be useless at small talk? Now you’re a fluent raconteur.

Which, when you’ve agonized to scrape together a measly 3% improvement, feels like crap. If you’re burdened with such social anxiety that it takes literally everything you have to go out in public for twenty minutes, make one awkward small talk, and then retreat home to collapse in embarrassment, you think, “Well, this isn’t worth it.”

But most self-improvement isn’t immediate improvement, my friend.

It’s compound interest.

I think that Rationalist self-improvement is like this. You don’t get better at life and rationality after taking one class with Prof. Kahneman. After 8 years of hard work, you don’t stand out from the crowd even as the results become personally noticeable. And if you discover Rationality in college and stick with it, by the time you’re 55 you will be three times better than what you would have been if you hadn’t compounded these 3% gains year after year, and everyone will notice that.

What’s more, the outcomes don’t scale smoothly with your level of skill. When rare, high leverage opportunities come around, being slightly more rational can make a huge difference. Bitcoin was one such opportunity; meeting my wife was another such one for me. I don’t know what the next one will be: an emerging technology startup? a political upheaval? cryonics? I know that the world is getting weirder faster, and the payouts to Rationality are going to increase commensurately.

Here’s what Scott himself wrote in response to a critic of Bayesianism:

Probability theory in general, and Bayesianism in particular, provide a coherent philosophical foundation for not being an idiot.

Now in general, people don’t need coherent philosophical foundations for anything they do. They don’t need grammar to speak a language, they don’t need classical physics to hit a baseball, and they don’t need probability theory to make good decisions. This is why I find all the “But probability theory isn’t that useful in everyday life!” complaining so vacuous.

“Everyday life” means “inside your comfort zone”. You don’t need theory inside your comfort zone, because you already navigate it effortlessly. But sometimes you find that the inside of your comfort zone isn’t so comfortable after all (my go-to grammatical example is answering the phone “Scott? Yes, this is him.”) Other times you want to leave your comfort zone, by for example speaking a foreign language or creating a conlang.

When David says that “You can’t possibly be an atheist because…” doesn’t count because it’s an edge case, I respond that it’s exactly the sort of thing that should count because it’s people trying to actually think about an issue outside their comfort zone which they can’t handle on intuition alone. It turns out when most people try this they fail miserably. If you are the sort of person who likes to deal with complicated philosophical problems outside the comfortable area where you can rely on instinct – and politics, religion, philosophy, and charity all fall in that area – then it’s really nice to have an epistemology that doesn’t suck.

If you’re the sort of person for whom success in life means stepping outside the comfort zone that your parents and high school counselor charted out for you, if you’re willing to explore spaces of consciousness and relationships that other people warn you about, if you compare yourself only to who you were yesterday and not to who someone else is today… If you’re weird like me, and if you’re reading this you probably are, I think that Rationality can improve your life a lot.

But to get better at basketball, you have to actually show up to the gym.

See also: The Martial Art of Rationality.

I think training rationality is more akin to training ‘throwing’ than it is to training basketball. Is there a general art of throwing that you could train to improve your ability to shoot a basketball, throw a football, hurl a discus, and toss a heavy rock over a barrier? Probably so; there might be some consistencies in body mechanics that would carry over to many varieties of throwing. However, you’re probably going to be better off identifying some specific things you want to throw and spending your time training with those.

Similarly, I don’t think it’s clear that there’s a general art of rationality (systematized winning) you can train that will produce better results than simply training a few specific areas where you would like to win.


At this point, I have mixed feelings about rationality and RSI. My best generic recommendation for a newcomer would be to spend between 200 and 400 hours on reading the core work and befriending compatible people, but without expecting too much from the organizations or the entire community. Insight porn is great, so are deep discussions concerning important topics, but I still agree with Scott that rationality has little practical utility if you’re already smart and have good intuitions or domain-specific knowledge. It’s easy to indulge in unrealistic expectations of a major self-transformation, join a movement that strives to save humanity, and then grow resentful after spending lots of time on something that is “just” kind of interesting and maybe occasionally helpful.

Speaking of the specific things you mentioned: noticing the early Bitcoin rush should probably be ascribed to the broader group of “early tech adopters”. While CFAR started from good premises and formalized interesting techniques, it didn’t seem to achieve much in the last few years: they’re still at the stage of offering $3,900 workshops with little internal progress, no serious academic/research collaboration on applied rationality, and citing one limited 2015 study noting a minor (0.17σ) increase in life satisfaction after one year. Despite the fact that most CFAR instructors and alumni are reasonable and friendly people, the adjacent circles struggle with cultish vibes and recurring controversies. With all due respect to a minor group of outliers for whom polyamory is a good long-term fit, the forced mainstream adoption of this model is mostly a symptom of collapsing gender relations. Polyamory, with its roots in covert female supremacy, is particularly easy to push in communities with a skewed male-to-female ratio involving vulnerable but industrious nerds. If you don’t believe me, the other commenters on your blog, or non-conservative researchers, check out the responses to Geoffrey’s recent article on polyamory, either on Quillette or on Woke Twitter, depending on your political inclinations. As for akrasia, it seems to be used as an umbrella term covering a general lack of energy and clarity that may result from depression/burnout/overworking, an inability to squeeze more agency out of a brain that consumes more “willpower points” on overthinking/double-checking for biases, and the acute awareness of the grim side of reality. Unfortunately, it is my impression that rationality makes you much more frustrated with the uncaring and uncertain nature of the dynamics governing the universe, our society, and its institutions. Hidden trade-offs like this one are everywhere.

What seems most interesting right now is the actual impact of this counterfactual and accumulated 3% rate of rationality-related improvement, as explained by real-life examples. If replacing one tablespoon of sugar in your daily hot chocolate with 10 nuts were equivalent to +3% on your health stats, would it really visibly improve your life experience in the next decade or two? If you were a 5’6″ rather than a 5’5″ Indian guy, would it really make you more successful in the workplace or while dating? I’m afraid that a broader context beyond the rationalist’s control keeps this 3% rate from compounding into benefits the way investments do, but I would love to be proven wrong.


For me personally? A rationalistish approach made my hair much better-looking (although I hadn’t actually encountered rationalism when I started working on it). Rationalism definitely strongly contributed to me finding a skincare routine that improved my skin in the short term, and I’m interested in the long-term effects. I’ve also always looked at cooking from what I would consider a more-rationalist-than-most perspective, and I’m a great cook. I don’t really identify with rationalism, but I’ve definitely seen self-improvement from it.


I think COVID-19 has been another one. Many rats seem to have taken it seriously back in Jan/Feb.

Wei Dai made some money shorting the stock market.


I love basketball. I play basketball for the Maypearl Panthers. Can y’all leave a comment on my blog? It is called basketballisms.


While I agree with your general conclusion that “LessWrong/Rationality/SSC help people be more rational”, I don’t fully buy the reasoning that gets you there.

The fact that SSC readers who come from LessWrong are X% better at various things does not mean that it was LessWrong that made them better. As always, correlation does not equal causation. There’s likely a very strong selection bias: people who frequent a forum/community that is highly intellectual, abstract and focused on self-improvement are likely to be more rational/intelligent/successful than those who don’t.
